My only substantial comment re: constructor changes: you implicitly assume throughout this change that this.currentLine can never be null.

We should either nuke the zero-argument Parser constructor, since nobody uses it, or add some logic to ensure that if stream != null and file != null, then this.currentLine is initialized to... something. Otherwise it'll blow up immediately in parse() when you try to get the first token to compare against "script".

That's what I wanted to do (the "nuking the zero-argument constructor" part).

But that zero-argument version is used for the "default" value of AshRuntime's "parser" field, which, itself, is clearly assumed to never be null.

So it's just a matter of "Parser can't/isn't allowed to parse anything if the zero-argument version was used to initialize it"

This shouldn't be hard to enforce, since, of the parsing methods, only Parser.parse is public.

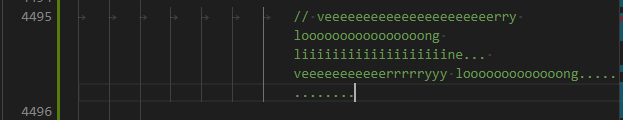

Diff:

+ Parser parser = new Parser( scriptFile, null, this.imports );

+ Scope result = parser.parseScope( scope, null, null, scope.getParentScope(), false, false );

+ if ( parser.currentLine.nextLine != null )

{

These could probably be final.

This project really doesn't fancy the use of *final* on variables (not fields (even then...)), so I tried to avoid peppering too many of it...

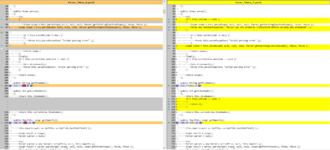

Diff:

@@ -653,4 +643,4 @@

if ( this.currentToken() == null )

{

- throw this.parseException( "}", "EOF" );

+ throw this.parseException( "}", this.currentToken() );

}

Hm. There are a few cases like this where you modify an error message

that'll never get run. I guess that's because parseException no longer takes (String, String).

Correct.

Diff:

@@ -1438,6 +1428,11 @@

private boolean parseIdentifier( final String identifier )

{

+ if ( identifier == null )

+ {

+ return false;

+ }

+

if ( !Character.isLetter( identifier.charAt( 0 ) ) && identifier.charAt( 0 ) != '_' )

{

return false;

@@ -1456,6 +1451,11 @@

private boolean parseScopedIdentifier( final String identifier )

{

+ if ( identifier == null )

+ {

+ return false;

+ }

+

if ( !Character.isLetter( identifier.charAt( 0 ) ) && identifier.charAt( 0 ) != '_' )

{

return false;

Safety checks are good, I suppose. Are these reachable by targeted scripts?

No, these checks are not

currently reachable.

The only way for this method to receive null is if it is supplied Parser.nextToken()

- the null check in parseScopedIdentifier is pretty much impossible to reach. It's only put there so to conform with parseIdentifier.

- the null check in parseIdentifier is *currently* unused, but *will* be used. Twice.

Once for a work-in-progress feature of the language server.

Once for a check that should currently be done, but isn't (bug): at the start of Parser.parseFor.

Diff:

@@ -1697,5 +1698,10 @@

while ( this.currentToken() != null && !this.currentToken().equals( "}" ) )

{

+ this.currentToken = null;

+ this.currentLine.tokens.removeLast();

+

+ final String line = this.restOfLine();

+

try

{

We're... dropping tokens in cli_execute { ... } blocks? Am I reading this correctly?

The two first lines (which I really should have turned into a method. Something like "eraseUnreadTokens()"), isn't "dropping" tokens.

-When not null,

Parser.currentToken contains a token that was *not yet read*.

-A token, the moment it is created, instantly gets added to the end of it's Line's

tokens field. Even though it's not read yet.

Imagine you have

Code:

1- cli_execute

2- {

3- something, something else, another action, some other command...

4- }

and we are at line 3.

By calling

Parser.currentToken() to check for the presence of "}", we are marking "something" as a token.

If we were to continue without removing it, the line would have two tokens:

"something" and ",something else, another action, some other command...".

We don't want that. We want a single token: "something, something else, another action, some other command..."

Nothing was read. Nothing was discarded.

I believe this Line.restOfLine() call causes one of the crashes I mentioned down

the line, although that's no worse than the current state.

As mentioned previously, the null checks are what's coming in the next patch, which include the addition of end-of-file checks anywhere about to use Parser.restOfLine().

Diff:

@@ -1710,8 +1716,12 @@

StaticEntity.printStackTrace( e );

}

- this.currentLine = "";

- this.fixLines();

+ if ( line.length() > 0 )

+ {

+ this.currentLine.makeToken( line.length() );

+ }

+ this.currentLine = this.currentLine.nextLine;

+ this.currentIndex = this.currentLine.offset;

}

if ( this.currentToken() == null )

(context: parseBasicScript) We're... turning each line into a token? This seems

to be consistent with existing behavior (where we return if/when we find a

closing brace on its own line. Seems fragile, though.

Again, remember that this is not all just to parse a script. This is for a language server, and the language server needs *everything* to be in tokens (even comments).

Here, for example, the lines inside cli_execute {...} blocks will be marked as "string"s (well, "macro"s...), so that language clients can color them accordingly with Semantic Tokens/Semantic Highlighting.

Diff:

@@ -3193,6 +3203,8 @@

else

{

+ Token anchor = this.currentToken();

+

Type baseType = this.parseType( scope, true, false );

if ( baseType != null && baseType.getBaseType() instanceof AggregateType )

{

@@ -3207,7 +3219,7 @@

{

if ( baseType != null )

{

- this.replaceToken( baseType.name );

+ this.rewindBackTo( anchor );

}

if ( ( result = this.parseVariableReference( scope ) ) != null )

{

To confirm: you need the rewinding logic because you may have parsed multiple

tokens while trying to parse an aggregate type?

Incorrect. If that was an aggregate type, no rewinding happens.

The rewinding happens if the type is a simple type, like int, boolean, string, buffer, location, bounty, etc.

It most likely is always only going to be a single "word".

We need to rewind because the "word" was *read*, and we don't want that (because the only thing we accept at the point is an aggregate literal, or a variable reference/call with a

variable.function() syntax).

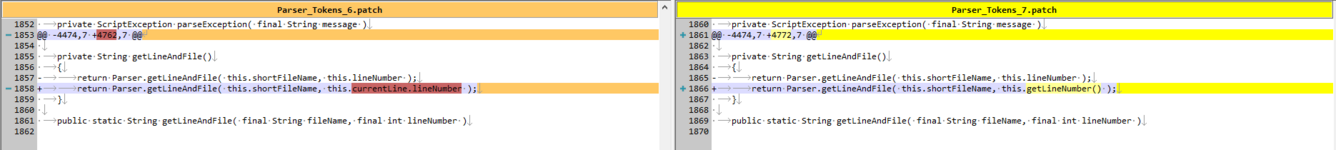

Diff:

@@ -3320,10 +3332,11 @@

private Value parseString( final BasicScope scope, Type type )

{

+ this.currentToken = null;

+ this.currentLine.tokens.removeLast();

+

Which token are we getting rid of? The opening quote?

Either way, this is something that I'm seeing a bunch of times and might be

clearer if you defined it as a helper method such as `removeLastToken()`.

Bonus points for comments indicating which tokens are being removed.

(._. )

Diff:

@@ -3346,22 +3359,39 @@

Concatenate conc = null;

StringBuilder resultString = new StringBuilder();

- for ( int i = 0; ; ++i )

+ for ( int i = 1; ; ++i )

{

- if ( i == this.currentLine.length() )

+ final String line = this.restOfLine();

+

+ if ( i == line.length() )

{

+ if ( i == 0 && this.currentIndex == this.currentLine.offset )

+ {

+ // Empty lines are OK.

+ this.currentLine = this.currentLine.nextLine;

+ this.currentIndex = this.currentLine.offset;

+ i = -1;

+ continue;

+ }

+

Can you help me understand what case the last if-statement is checking?

Something about an empty line? When does it trigger?

this:

Code:

string x = "abc\

def\

\

ghi\

jkl";

This string will read:

"abc

def

ghi

jkl".

You don't generate an error if you don't put a \ on every line.

(this was previously automatically done by Parser.fixLines() )